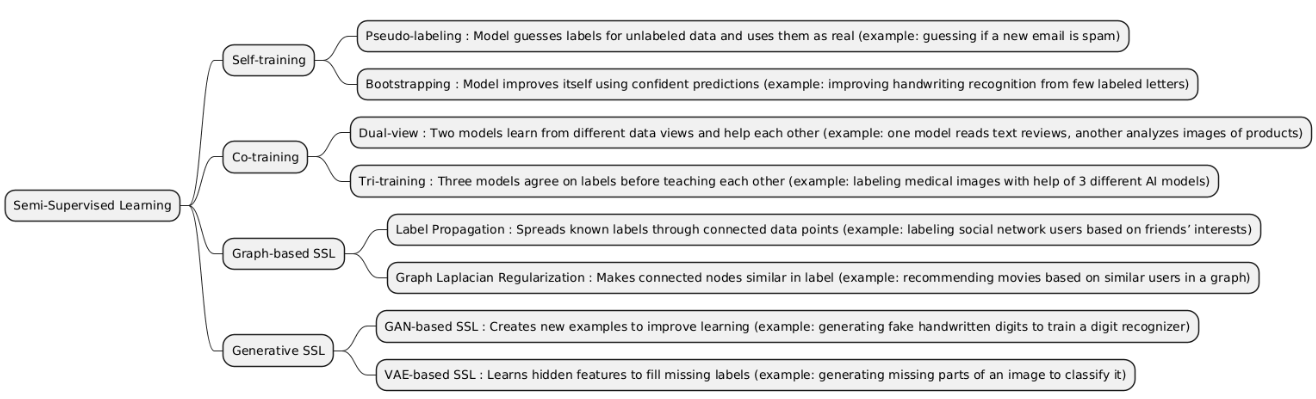

Semi-Supervised Learning is a type of machine learning that combines a small amount of labeled data with a large amount of unlabeled data. This approach helps the model learn more effectively than using only labeled data, making it useful when labeling data is expensive or time-consuming.

| Type | What it is | When it is used | When it is preferred over other types | When it is not recommended | Examples of projects that is better use it incide him |

|---|---|---|---|---|---|

| Self-Training | Self-Training is method where a model is first trained on a small labeled dataset, then it predicts labels for unlabeled data. The most confident predictions are added to the labeled set iteratively to improve the model. |

• When you have a small labeled dataset and a large unlabeled dataset. • When labeling data is expensive or time-consuming. • Useful for text classification, image recognition, and speech tasks. |

• Better than Co-Training when you have only one view or feature set. • Better than Graph-based Semi-Supervised Learning when graph structure is unavailable. • Better than Generative Semi-Supervised Learning when you prefer a simpler, iterative approach without complex generative models. |

• When predictions are not reliable — wrong labels may propagate errors. • When you have multiple complementary feature views — Co-Training is better. • When data naturally forms a graph or network structure — Graph-based methods perform better. • When you can train a generative model easily — Generative SSL may give more robust results. |

• Text classification with few labeled emails and many unlabeled emails. • Image recognition with a small labeled image set and large unlabeled dataset. • Speech recognition using a few transcribed audio samples and many unlabeled audio files. • Medical diagnosis with a few labeled patient records and many unlabeled records. |

| Co-Training | Co-Training is a method where two separate models are trained on different feature sets (views) of the same data. Each model labels unlabeled data for the other, iteratively improving both models. |

• When you have two or more complementary feature sets for the same data. • When labeled data is scarce but unlabeled data is abundant. • Common in web page classification, multimedia analysis, and text mining. |

• Better than Self-Training when you have multiple independent views — reduces error propagation. • Better than Graph-based SSL when graph structure is unknown or hard to construct. • Better than Generative SSL when you prefer a simpler iterative method without complex generative models. |

• When there is only one feature set — Co-Training cannot be applied. • When views are not independent or weakly correlated — models may reinforce errors. • When you can leverage graph structures — Graph-based SSL may be more effective. • When you can train generative models easily — Generative SSL may give better performance. |

• Web page classification using text content and hyperlink structure as separate views. • Email spam detection using email body and metadata. • Multimedia classification using image features and audio features. |

| Graph-based Semi-Supervised Learning | Graph-based SSL represents data as a graph, where nodes are samples and edges represent similarity or relationships. Labels propagate from labeled nodes to unlabeled nodes through the graph structure. |

• When data naturally forms a graph or network structure (e.g., social networks, citation networks). • When you have few labeled nodes and many unlabeled nodes connected via similarity. • Common in node classification, link prediction, and network analysis. |

• Better than Self-Training when data relationships are crucial, not just iterative labeling. • Better than Co-Training when you don’t have multiple independent feature sets but have graph connectivity. • Better than Generative SSL when you want direct label propagation rather than building a generative model. • Ideal for networked or relational datasets. |

• When data cannot be represented as a meaningful graph. • When only one small feature set is available and connectivity is weak — Self-Training may suffice. • When building graph is computationally expensive for very large datasets. • When a generative approach is more natural or effective. |

• Node classification in a citation network (classify papers by topic). • Fraud detection in transaction networks. • Social network analysis (predict user interests based on friends). • Protein-protein interaction prediction in bioinformatics. |

| Generative Semi-Supervised Learning | Generative Semi-Supervised Learning uses a generative model to learn the joint distribution of inputs and labels. It can generate synthetic data and assign labels to unlabeled data to improve learning. |

• When you have few labeled samples and a large amount of unlabeled data. • When modeling the data distribution can help improve classification. • Common in image generation, text generation, and speech tasks. |

• Better than Self-Training when you want a probabilistic model that captures data distribution. • Better than Co-Training when multiple views are unavailable. • Better than Graph-based SSL when data is not naturally a graph. • Ideal when generative modeling is feasible and can improve semi-supervised learning. |

• When generating a good generative model is difficult. • When data has complex structure or high dimensionality that is hard to model. • When simpler methods (Self-Training or Co-Training) suffice. • When the dataset is small and building a generative model may overfit. |

• Semi-supervised image classification using a Variational Autoencoder (VAE). • Text classification with few labeled texts and a generative language model. • Speech recognition using generative models to label unlabeled audio. |

import numpy as np

from sklearn.datasets import make_classification

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import accuracy_score

# 1️⃣ Create a small labeled dataset

X, y = make_classification(n_samples=200, n_features=5, n_informative=3, n_classes=2, random_state=42)

X_labeled, X_unlabeled, y_labeled, _ = train_test_split(X, y, test_size=0.7, random_state=42) # 30% labeled, 70% unlabeled

# 2️⃣ Train initial model on labeled data

model = RandomForestClassifier()

model.fit(X_labeled, y_labeled)

# 3️⃣ Predict pseudo-labels for unlabeled data

pseudo_labels = model.predict(X_unlabeled)

# 4️⃣ Combine labeled data + pseudo-labeled data

X_combined = np.vstack((X_labeled, X_unlabeled))

y_combined = np.hstack((y_labeled, pseudo_labels))

# 5️⃣ Retrain model on combined dataset

model.fit(X_combined, y_combined)

# 6️⃣ Evaluate model (on all data, just for demonstration)

y_pred = model.predict(X)

accuracy = accuracy_score(y, y_pred)

print("Accuracy after pseudo-labeling:", accuracy)

import numpy as np

from sklearn.datasets import make_classification

from sklearn.semi_supervised import LabelPropagation

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

# 1️⃣ Create dataset

X, y = make_classification(n_samples=200, n_features=5, n_informative=3, n_classes=2, random_state=42)

# 2️⃣ Split into labeled and unlabeled data

X_labeled, X_unlabeled, y_labeled, y_unlabeled = train_test_split(X, y, test_size=0.7, random_state=42)

y_unlabeled[:] = -1 # Mark unlabeled points with -1 as required by LabelPropagation

# 3️⃣ Combine labeled and unlabeled labels

y_combined = np.hstack((y_labeled, y_unlabeled))

X_combined = np.vstack((X_labeled, X_unlabeled))

# 4️⃣ Initialize Label Propagation model

label_prop_model = LabelPropagation()

label_prop_model.fit(X_combined, y_combined)

# 5️⃣ Predict labels for all data

y_pred = label_prop_model.transduction_

# 6️⃣ Evaluate on original labels

accuracy = accuracy_score(y, y_pred)

print("Accuracy after Label Propagation:", accuracy)